Browse Source

添加文档切边项目

46 changed files with 2896 additions and 0 deletions

+ 8

- 0

.gitignore

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 201

- 0

Dewarp/LICENSE

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

File diff suppressed because it is too large

+ 93

- 0

Dewarp/README.md

+ 68

- 0

Dewarp/corner_data_generator.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 40

- 0

Dewarp/csv_convert.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 4

- 0

Dewarp/data.csv

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

+ 67

- 0

Dewarp/data_augmentor/augmentData.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 63

- 0

Dewarp/data_augmentor/cornerData.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 32

- 0

Dewarp/data_augmentor/label.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 21

- 0

Dewarp/data_augmentor/testData.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 4

- 0

Dewarp/dataprocessor/__init__.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

+ 117

- 0

Dewarp/dataprocessor/dataloaders.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 207

- 0

Dewarp/dataprocessor/dataset.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 19

- 0

Dewarp/dataprocessor/datasetfactory.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 21

- 0

Dewarp/dataprocessor/loaderfactory.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 24

- 0

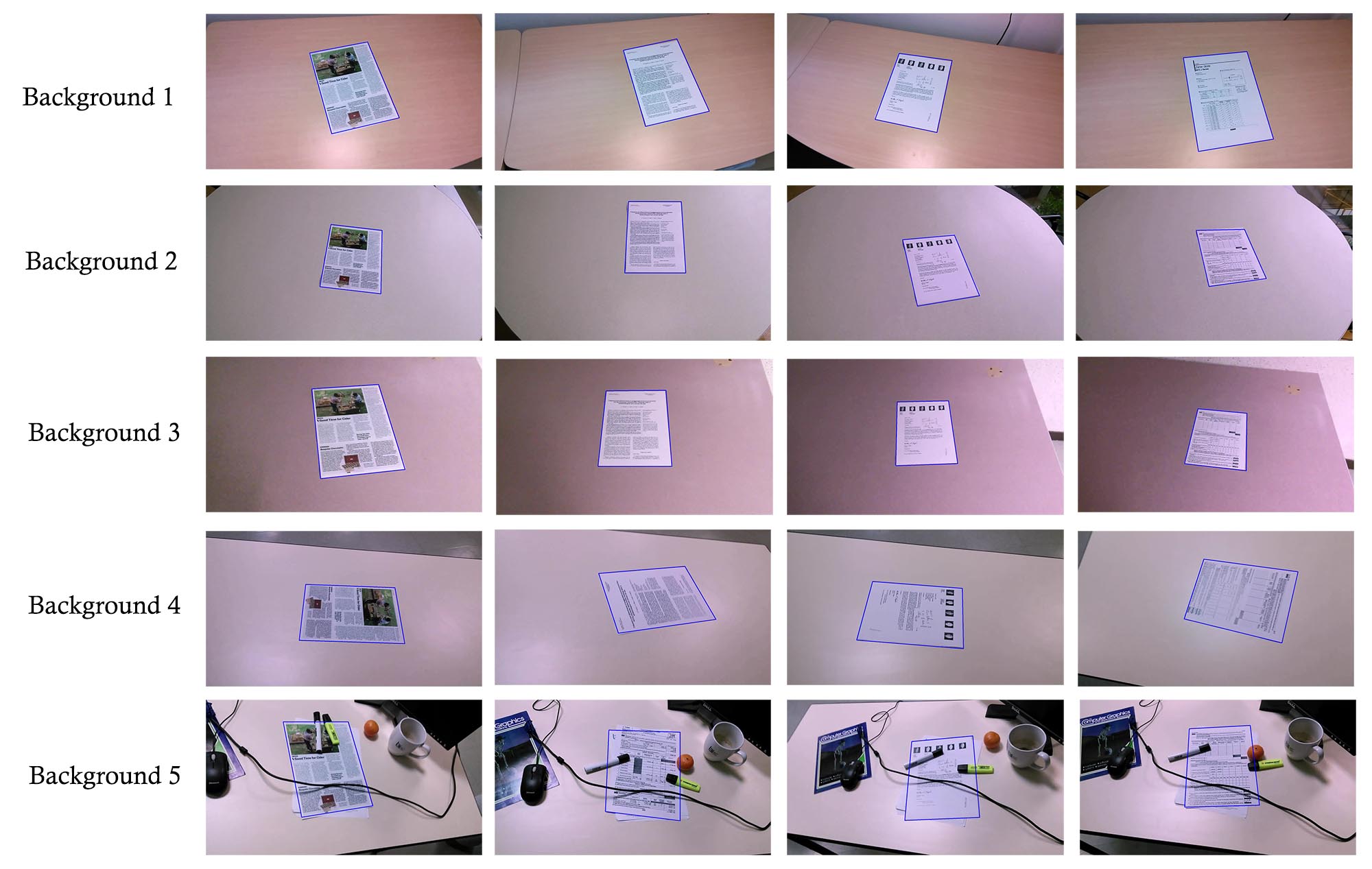

Dewarp/dataset_intro.md

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 72

- 0

Dewarp/demo.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 72

- 0

Dewarp/document_data_generator.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 69

- 0

Dewarp/evaluate.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 6

- 0

Dewarp/evaluation/__init__.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 72

- 0

Dewarp/evaluation/corner_extractor.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 84

- 0

Dewarp/evaluation/corner_refiner.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 0

Dewarp/experiment/__init__.py

|

|||

|

|

||

+ 53

- 0

Dewarp/experiment/experiment.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 28

- 0

Dewarp/json_to_csv.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 0

Dewarp/model/__init__.py

|

|||

|

|

||

+ 78

- 0

Dewarp/model/cornerModel.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 37

- 0

Dewarp/model/modelfactory.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 37

- 0

Dewarp/model/res_utils.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 184

- 0

Dewarp/model/resnet32.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 1

- 0

Dewarp/plotter/__init__.py

|

|||

|

|

||

+ 88

- 0

Dewarp/plotter/plotter.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 10

- 0

Dewarp/pytorch2onnx.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 19

- 0

Dewarp/pytorch2paddle.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

BIN

Dewarp/results/qualitativeResults.jpg

+ 41

- 0

Dewarp/smartdoc_data_processor/video_to_image.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 157

- 0

Dewarp/train_model.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 149

- 0

Dewarp/train_seg_model.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 2

- 0

Dewarp/trainer/__init__.py

|

|||

|

|

||

|

|

||

+ 63

- 0

Dewarp/trainer/evaluator.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 111

- 0

Dewarp/trainer/trainer.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 2

- 0

Dewarp/utils/__init__.py

|

|||

|

|

||

|

|

||

+ 114

- 0

Dewarp/utils/colorer.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 296

- 0

Dewarp/utils/utils.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 40

- 0

Dewarp/video_to_images.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||